In the pursuit of a story, journalists are often required to protect the identity of their source. Many of the most impactful works of journalism have relied upon such an arrangement, yet the balancing act between publishing information that is vital to a story and protecting the person behind that information can present untold challenges, especially when the personal safety of the source is at risk.

These challenges are particularly heightened in this age of omnipresent data collection. Advances in computing technology have enabled large volumes of data processing, which in turn promotes efforts to monetise data or use it for surveillance. In many cases, the privacy of individuals is seen as an obstacle, rather than an essential requirement. Recent history is peppered with examples of privacy violations, ranging from Cambridge Analytica’s use of personal data for ad targeting to invasive data tracking by smart devices. The very expectation of privacy protection seems to be withering away in the wake of ongoing data leaks and data breaches.

With more data available than ever before, journalists are also increasingly relying on it in their reporting. But, just as with confidential sources, they need to be able to evaluate what information to publish without revealing unnecessary personal details. While some personal information may be required, it’s likely that most stories can be published without needing to identify all individuals in a dataset. In these cases, journalists can use various methods to protect these individuals’ privacy, through processes known as de-identification or anonymisation.

To help journalists champion responsible and privacy-centric data practices, this Long Read will cover how to:

- identify personal information

- evaluate the risks associated with publishing personal information

- utilise different de-identification methods in data journalism.

Defining personal information

While the definition of what constitutes personal information has become more formalised through legal reform in the late 2000s, it has long been the role of journalists to uncover if a release of data, whether intended or accidental, jeopardises the privacy of individuals. After AOL published millions of online search queries in 2006, journalists were able to piece together individual identities solely based on individuals’ search histories, including sensitive information about some individuals’ health statuses and dating preferences. Similarly, in the wake of Edward Snowden’s revelations of NSA spying, various researchers have shown how communication metadata -- information generated by our devices -- can be used to identify users, or serve as an instrument of surveillance.

But, when using a dataset as a source in a story, journalists are put in the new position of having to evaluate the sensitivity of the information at hand themselves. And this assessment starts with understanding what is and isn’t personal information.

Personally identifiable information (PII), legally described as ‘personal data’ in Europe or ‘personal information’ in some other jurisdictions, is generally understood as anything that can directly identify an individual, although it is important to note that PII exists along a spectrum of both identifiability and sensitivity. For instance, names or email addresses have a high value in terms of identifiability, but a relatively low sensitivity, as their publication generally doesn’t endanger an individual. Location data or a personal health record may have lower identifiability, but a higher degree of sensitivity. For illustration purposes, we can plot various types of PII along the sensitivity and identifiability spectrums.

PII exists along a spectrum of sensitivity and identifiability.

The degree to which information is personally identifiable or sensitive depends on both context and the compounding effect of data mixing. A person’s name may carry a low risk in a dataset of Facebook fans, but if the name is on a list of political dissidents, then the risk of publishing that information increases dramatically. The value of information also changes when combined with other data. On its own, a dataset that contains purchase history may be difficult to link to any given individual; however, when combined with location information or credit card numbers, it can reach higher degrees of both identifiability and sensitivity.

In a 2016 case, the Australian Department of Health published de-identified pharmaceutical data for research purposes, only to have academics decrypt one of the de-identified fields. This created the potential for personal information to be exposed, prompting an investigation by the Australian Privacy Commissioner. In another example, BuzzFeed journalists investigating fraud among pro tennis players in 2016 published the anonymised data that they used in their reporting. However, a group of undergraduate students was able to re-identify the affected tennis players by using publicly available data. As these examples illustrate, a journalist’s ability to determine the personal nature of a dataset requires a careful evaluation of both the information it contains and the information that may already be publicly available.

While these tennis players’ names may appear anonymous, BuzzFeed’s open-source methodology also included other data which allowed for the possibility of re-identification.
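To make the risk of data mixing concrete, the following is a minimal Python sketch of a linkage check. All records, field names, and the `link` helper are made up for illustration and are not drawn from any of the cases above. It shows how a release with names removed can still be matched against a public list when a combination of indirect identifiers is unique.

```python
# Hypothetical "anonymised" release: names removed, indirect identifiers kept.
released = [
    {"player_id": "P1", "birth_year": 1988, "country": "FR", "matches": 412},
    {"player_id": "P2", "birth_year": 1991, "country": "FR", "matches": 301},
    {"player_id": "P3", "birth_year": 1988, "country": "AR", "matches": 377},
]

# Hypothetical public reference data that does contain names.
public = [
    {"name": "Player A", "birth_year": 1988, "country": "FR", "matches": 412},
    {"name": "Player B", "birth_year": 1991, "country": "FR", "matches": 301},
    {"name": "Player C", "birth_year": 1988, "country": "AR", "matches": 377},
    {"name": "Player D", "birth_year": 1990, "country": "ES", "matches": 250},
]

QUASI_IDENTIFIERS = ("birth_year", "country", "matches")


def link(released_rows, public_rows, keys):
    """Map each released ID to a name when its key combination matches exactly one public row."""
    index = {}
    for row in public_rows:
        index.setdefault(tuple(row[k] for k in keys), []).append(row["name"])

    matches = {}
    for row in released_rows:
        candidates = index.get(tuple(row[k] for k in keys), [])
        if len(candidates) == 1:  # a unique match is a re-identification
            matches[row["player_id"]] = candidates[0]
    return matches


reidentified = link(released, public, QUASI_IDENTIFIERS)
```

Running a check like this against whatever public data you can find, before publishing, gives a rough sense of how many records an outsider could re-identify.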

What is de-identification?

In order to conceal the identity of a source, a journalist may grant anonymity or use a pseudonym, such as Deep Throat in the case of the Watergate scandal. When working with information, the process of removing personal details is called de-identification or, in some jurisdictions, anonymisation. Long before the internet, data de-identification techniques were employed by journalists, for example by redacting names from leaked documents. Today, journalists are armed with new de-identification methods and tools for protecting privacy in digital environments, which make it easier to analyse and manipulate ever larger amounts of data.

The goal of de-identifying data is to avoid possible re-identification, in other words, to anonymise data so that it cannot be used to identify an individual. While some legal definitions of data anonymisation exist, the regulation and enforcement of de-identification is usually handled on an ad-hoc, industry-specific basis. For instance, health records in the United States must comply with the Health Insurance Portability and Accountability Act (HIPAA), which requires the anonymisation of direct identifiers, such as names, addresses, and social security numbers, before data can be published for public consumption. In the European Union, the General Data Protection Regulation (GDPR) enforces anonymisation of both direct identifiers, such as names, addresses, and emails, as well as indirect identifiers, such as job titles and postal codes.

In developing their story, journalists have to decide what information is vital to a story and what can be omitted. Often, the more valuable a piece of information, the more sensitive it is. For example, health researchers need to be able to access diagnostic or other medical data, even though that data can have a high degree of sensitivity if it is linked to a given individual. To strike the right balance between data usefulness and sensitivity, when deciding what to publish, journalists can choose from a range of de-identification techniques.

An example of a redacted CIA document. Source: Wikimedia.

Data redaction

The simplest way to de-identify a dataset is to remove or redact any personal or sensitive data. While an obvious drawback is the possible loss of the data’s informative value, redaction is most commonly used to deal with direct identifiers, such as names, addresses, or social security numbers, which usually don’t represent the crux of a story.

That said, technological advances and the growing availability of data will continue to increase the identifiability potential of indirect identifiers, so journalists shouldn’t rely on data redaction as their only means of de-identification.
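As a rough illustration of redaction, the sketch below drops direct-identifier columns from a small CSV using only Python's standard library. The column names, the sample rows, and the `redact_columns` helper are assumptions made for this example. A real dataset will need its own list of columns to remove.

```python
import csv
import io

# Hypothetical column names; adjust to the dataset at hand.
DIRECT_IDENTIFIERS = {"name", "address", "ssn"}


def redact_columns(rows, columns_to_drop):
    """Return copies of the rows without the listed columns."""
    return [
        {key: value for key, value in row.items() if key not in columns_to_drop}
        for row in rows
    ]


# Made-up sample data standing in for a real file.
raw_csv = """name,address,ssn,age,diagnosis
Jane Roe,1 Example St,000-00-0000,34,asthma
John Poe,2 Sample Ave,000-00-0001,58,diabetes
"""

rows = list(csv.DictReader(io.StringIO(raw_csv)))
redacted = redact_columns(rows, DIRECT_IDENTIFIERS)
```

Note that the remaining columns (here, age and diagnosis) are indirect identifiers and may still need one of the techniques described below.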

Pseudonymisation

In some cases, removing information outright limits the usefulness of the data. Pseudonymisation offers a possible solution, by replacing identifiable data with pseudonyms that are generated either randomly, or by an algorithm. The most common techniques for pseudonymisation are hashing and encryption. Hashing relies on mathematical functions to convert data into unreadable hashes. Encryption, on the other hand, relies on a two-way algorithmic transformation of the data. The primary difference between the two methods is that encrypted data can be decrypted with the right key, whereas hashed information is non-reversible. Many database systems, such as MySQL and PostgreSQL, enable both the hashing and encryption of data.
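For readers who work in Python rather than a database, here is a minimal sketch of hash-based pseudonymisation using the standard library's `hmac` and `hashlib` modules. It uses a keyed hash (HMAC-SHA256), which is one reasonable choice among several and not a method prescribed by this article. The `pseudonymise` function, the normalisation step, and the 16-character truncation are assumptions made for the example.

```python
import hashlib
import hmac
import secrets

# The key must stay secret and be stored separately from the published data.
SECRET_KEY = secrets.token_bytes(32)


def pseudonymise(value: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable pseudonym for `value` using HMAC-SHA256 (a keyed hash)."""
    normalised = value.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalised, hashlib.sha256)
    return digest.hexdigest()[:16]  # truncated only for readability


a = pseudonymise("Jane Roe")
b = pseudonymise("jane roe ")  # same person, different formatting
c = pseudonymise("John Poe")
```

The same input always yields the same pseudonym, so records can still be linked to each other, while anyone without the key cannot simply recompute the hashes from a list of names.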

Data pseudonymisation played an important role in the Offshore Leaks investigation by the International Consortium of Investigative Journalists (ICIJ). Given the vast volume of data that needed to be processed, journalists relied on unique codes associated with each individual and entity that appeared in the leaked documents. These pseudonymised codes were used to show links between leaked documents, even in cases when the names of individuals and entities didn’t match.

Information is considered pseudonymised if it can no longer be linked to an individual without the use of additional data. At the same time, the ability to combine pseudonymised data with other datasets renders pseudonymisation a possibly weak method of de-identification. Even by using the same pseudonym repeatedly throughout a dataset, its effectiveness can decrease, as the potential for finding relationships between variables grows with every occurrence of the pseudonym. Finally, in some cases, the very algorithms used to create pseudonyms can be cracked by third parties, or have inherent security vulnerabilities. Therefore, journalists should be careful when using pseudonymisation to hide personal data.

In 2014, Anthony Tockar identified taxi trips made by celebrities Bradley Cooper and Jessica Alba from a dataset of New York taxi rides, where the taxi’s license and medallion numbers were supposedly hashed. To crack the code, he simply searched for images of celebrities getting out of taxis and combined them with other information available in the dataset.
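The warning above that pseudonymisation algorithms can be cracked is easiest to see with a plain, unkeyed hash over a small set of possible values. The sketch below assumes a hypothetical identifier format (one digit, one letter, two digits) and simply hashes every possibility to build a lookup table. It illustrates the general weakness and is not an account of how any particular dataset was attacked.

```python
import hashlib
import itertools
import string


def unsalted_hash(value: str) -> str:
    """A plain, unkeyed hash: the kind of pseudonym that is easy to reverse."""
    return hashlib.sha256(value.encode("utf-8")).hexdigest()


def build_lookup():
    """Hash every possible ID of the hypothetical form digit-letter-digit-digit."""
    table = {}
    for d1, letter, d2, d3 in itertools.product(
        string.digits, string.ascii_uppercase, string.digits, string.digits
    ):
        identifier = f"{d1}{letter}{d2}{d3}"
        table[unsalted_hash(identifier)] = identifier
    return table


lookup = build_lookup()            # 10 * 26 * 10 * 10 = 26,000 entries
published = unsalted_hash("7B42")  # what a reader of the dataset would see
recovered = lookup[published]      # the original identifier, recovered by lookup
```

Because the space of possible identifiers is so small, the whole table can be built in well under a second on an ordinary laptop. A secret key or random pseudonyms avoid this particular attack.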

Statistical noise

Since both data redaction and pseudonymisation carry the risk of re-identification, they are often combined with statistical noise methods, such as k-anonymisation. These ensure that at least a set number of individuals share the same indirect identifiers, thereby hindering re-identification. As a best practice, every combination of identifiers should be shared by no fewer than 10 entries. Common techniques for introducing statistical noise into a dataset are generalisation, such as replacing the name of a country with a continent, and bucketing, which is the conversion of numbers into ranges. Used together with data redaction and pseudonymisation, these techniques help ensure that no unique combinations of identifiers remain in a dataset. In the following example, data in certain columns is generalised or redacted to prevent re-identification of individual entries.

Adding statistical noise to prevent re-identification.
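The same idea can be sketched in a few lines of Python. The example below generalises countries to continents, buckets ages into ten-year ranges, and then measures the size of the smallest group of rows that share the same indirect identifiers. The mapping, the sample rows, and the helper names are all assumptions made for illustration.

```python
from collections import Counter

# Hypothetical mapping; a real one would cover every country in the data.
CONTINENT = {"France": "Europe", "Spain": "Europe", "Kenya": "Africa", "Ghana": "Africa"}


def bucket_age(age: int, width: int = 10) -> str:
    """Convert an exact age into a range such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def generalise(row):
    """Replace country with continent and exact age with an age range."""
    return {"region": CONTINENT[row["country"]], "age_range": bucket_age(row["age"])}


def smallest_group(rows, keys):
    """Size of the smallest group of rows sharing the same values for `keys`."""
    counts = Counter(tuple(row[k] for k in keys) for row in rows)
    return min(counts.values())


# Made-up rows: every person is unique before generalisation.
raw = [
    {"country": "France", "age": 31},
    {"country": "Spain", "age": 38},
    {"country": "Kenya", "age": 52},
    {"country": "Ghana", "age": 57},
]

k_before = smallest_group(raw, ("country", "age"))
generalised = [generalise(row) for row in raw]
k_after = smallest_group(generalised, ("region", "age_range"))
```

In this toy dataset the smallest group grows from one row to two. With real data, the same check can be repeated after each round of generalisation until the smallest group reaches the chosen threshold, such as the 10 entries mentioned above.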

Data aggregation

When the integrity of raw data doesn’t need to be preserved, journalists can rely on data aggregation as a method for de-identification. Instead of publishing the complete dataset, data can be published in the form of summaries that omit any direct or indirect identifiers. The principal concern with data aggregation is ensuring that the smallest segments of the aggregated data are large enough, so as to not reveal specific individuals. This is particularly relevant when multiple dimensions of aggregated data can be combined, as in the case study below.
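A minimal sketch of aggregation with a minimum segment size might look like the following. The threshold of 10, the field names, and the made-up rows are assumptions. The point is only that any cell below the threshold is withheld rather than published.

```python
from collections import Counter

MIN_CELL_SIZE = 10  # assumed threshold; choose one appropriate to the data


def aggregate(rows, keys, min_cell_size=MIN_CELL_SIZE):
    """Count rows per combination of `keys`, suppressing cells below the threshold."""
    counts = Counter(tuple(row[k] for k in keys) for row in rows)
    return {
        cell: (n if n >= min_cell_size else None)  # None marks a suppressed cell
        for cell, n in counts.items()
    }


# Made-up survey rows.
rows = (
    [{"region": "North", "answer": "yes"}] * 40
    + [{"region": "North", "answer": "no"}] * 25
    + [{"region": "South", "answer": "yes"}] * 12
    + [{"region": "South", "answer": "no"}] * 3
)

summary = aggregate(rows, ("region", "answer"))
```

Be aware that if row or column totals are published alongside such a table, a suppressed cell can sometimes be recovered by subtraction, so totals may need the same treatment.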

Case study: Mozilla’s Facebook Survey

Following the Cambridge Analytica scandal, the Mozilla Foundation conducted a survey of internet users about their attitudes toward Facebook. In addition to their attitudes, respondents were asked for information about their age, country of residence, and digital proficiency. The survey tool also recorded IP addresses of users, as well as other metadata, such as the device used and time of submission.

These responses were made available via an interactive tool, which allowed audiences to closely examine the data, including through the ability to cross-tabulate results by demographic criteria, like age or country. But Mozilla also wanted to release all of the survey data to the public for further analysis, so a careful approach to de-identification was required.

To begin the de-identification process, Mozilla removed all communication metadata that wasn’t required to complete the analysis. For instance, IP addresses of respondents, as well as the time of submissions, were scrubbed from the dataset. The survey didn’t record direct identifiers, such as names or email addresses, so no redaction or pseudonymisation was required. Even though the survey included over 46,000 responses, the data included certain combinations of indirect identifiers, such as country and age information, that allowed users to zoom in on small samples of the respondents. Since this increased the risk of re-identification, all countries with fewer than 700 respondents were bundled into an ‘other’ category, which added sufficient statistical noise to the data.
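The bundling step can be sketched as follows. This is not Mozilla's actual code: the `bundle_small_groups` helper, the field names, and the sample responses are hypothetical, and the threshold is scaled down from 700 to 4 so that the example stays small.

```python
from collections import Counter


def bundle_small_groups(rows, field, threshold, other_label="Other"):
    """Replace values of `field` that occur fewer than `threshold` times with a catch-all label."""
    counts = Counter(row[field] for row in rows)
    return [
        {**row, field: row[field] if counts[row[field]] >= threshold else other_label}
        for row in rows
    ]


# Made-up responses, scaled down for illustration.
responses = (
    [{"country": "US", "age": "25-34"}] * 5
    + [{"country": "DE", "age": "35-44"}] * 4
    + [{"country": "IS", "age": "18-24"}] * 1
    + [{"country": "MT", "age": "45-54"}] * 2
)

bundled = bundle_small_groups(responses, "country", threshold=4)
country_counts = Counter(row["country"] for row in bundled)
```

In this toy data the combined ‘Other’ group still holds only three responses, which is below the threshold. It is worth checking the size of the catch-all group itself before publishing.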

Despite these efforts, Mozilla’s privacy and legal teams remained cautious about publishing the data, since its global character implied possible legal liability in various jurisdictions. But, in the end, the value of publishing the data outweighed any remaining privacy concerns.

De-identification workflows for journalists

For journalists on a deadline, de-identification may appear to play second fiddle to more substantive decisions, such as assessing data quality or deciding how to visualise a dataset. But ensuring the privacy of individuals should nevertheless have a firm place in the journalistic process, especially since improper handling of personal data can undermine the very credibility of the piece. Legal liability under privacy laws may also be of concern if the publication is responsible for data collection or processing. Therefore, data journalists should take the following steps to incorporate de-identification into their workflows:

1. Does my dataset include personal information?

It may be the case that the dataset you are working with includes weather data, or publicly available sport statistics, which absolves you from the need to worry about de-identification. In other cases, the presence of names or social security numbers will quickly make any privacy risks apparent. Often, however, determining whether data is personally identifiable may require a closer examination. This is particularly the case when working with leaked data, as explained in this Long Read by Susan McGregor and Alice Brennan. Aside from noting the presence of any direct identifiers, journalists should pay close attention to indirect identifiers, such as IP addresses, job information, and geographical records. As a rule of thumb, any information relating to a person should be considered a privacy risk and processed accordingly.
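A quick automated scan can help with this first pass, although it is no substitute for reading the data. The sketch below flags columns whose values look like email addresses, IPv4 addresses, or US social security numbers. The regular expressions are deliberately simple assumptions that will miss some cases and raise some false positives, and the sample rows are made up.

```python
import re

# Deliberately simple patterns: expect both misses and false positives.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "ipv4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def flag_columns(rows):
    """Return {column: set of pattern names found in any value of that column}."""
    flagged = {}
    for row in rows:
        for column, value in row.items():
            for label, pattern in PATTERNS.items():
                if pattern.search(str(value)):
                    flagged.setdefault(column, set()).add(label)
    return flagged


# Made-up rows; identifiers often hide in free-text fields such as "note".
sample = [
    {"contact": "jane@example.org", "client": "203.0.113.7", "note": "called twice"},
    {"contact": "n/a", "client": "198.51.100.23", "note": "SSN 000-12-3456 on file"},
]

report = flag_columns(sample)
```

A scan like this will not catch names, job titles, or locations, so any column it leaves unflagged still needs a manual look.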

2. How sensitive and identifiable is the data?

As explained above, personal information carries different risks based on the context in which it exists, including whether it can be combined with other data. This means that journalists need to evaluate two things: 1) how identifiable a piece of data is and 2) how sensitive it is to the privacy of an individual. Ask yourself: Will an individual’s association with the story endanger their safety or reputation? Can the data at hand be combined with other available datasets that may expose an individual’s identity? If so, do the benefits of publishing this data outweigh the associated privacy risks? A case-by-case approach is required to balance the public interest in publishing with the privacy risks of revealing personal details.

3. How will the data be published?

A journalist writing for a print publication in the pre-internet era didn’t have to worry about how data would be disclosed, as printed charts and statistics do not allow for the further querying of their underlying data. However, at the cutting edge of data journalism today, sophisticated tools and interactive visualisations enable audiences to undertake a detailed examination of the data used in a particular story. For example, many journalists opt for an open source approach, with code and data shared on GitHub. To open source with privacy in mind, all data needs to be carefully scrubbed of personal information. When it comes to visualisations, some journalists protect privacy by leveraging pre-aggregated data, which obfuscates the original dataset. But it’s important to check that each aggregated group is large enough that no individual can be singled out.

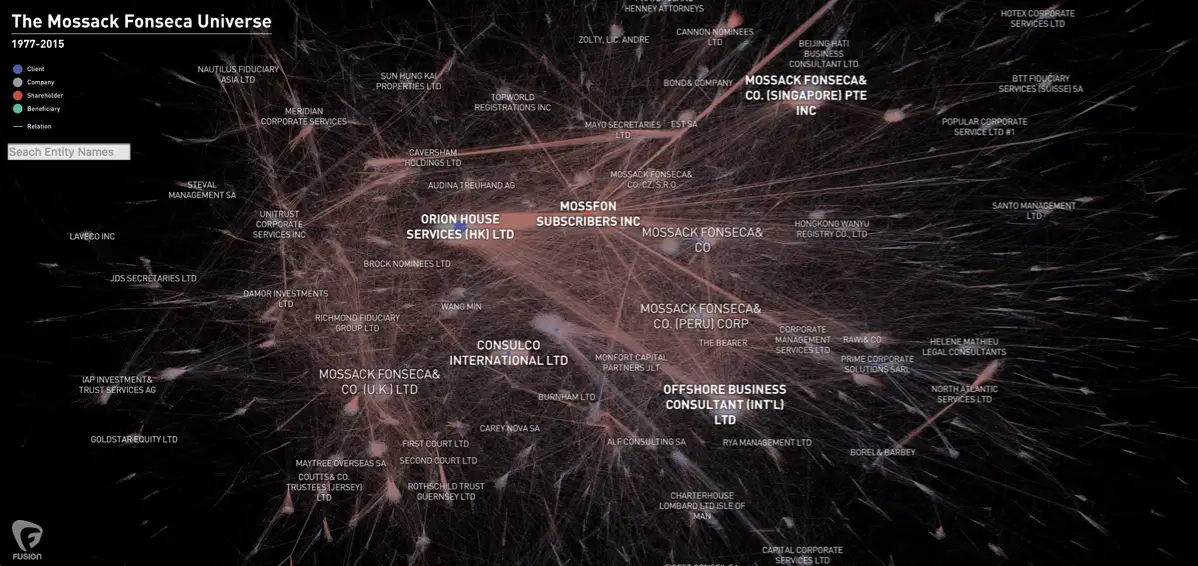

Fusion uses a visualisation of interrelated entities to illustrate the networks investigated as part of the Panama Papers, while still maintaining privacy boundaries on the data.

4. Which de-identification technique is right for your data?

Journalists will often need to deploy a combination of de-identification techniques to best suit the data at hand. For direct identifiers, data redactions and pseudonymisation -- if properly implemented -- usually suffice in protecting the privacy of individuals. For indirect identifiers, consider adding some statistical noise by grouping data into buckets or generalising information that may not be vital to the story. Data aggregation is the best option for highly sensitive data, although journalists still have to ensure that there is a broad enough range of data and sufficiently uniform distribution in the aggregated variables to ensure that no personal information is inadvertently exposed.

Leading by example

Once data is available online, there is no possibility for revisions or corrections. Even if you consider that your dataset has been scrubbed of any personal details, there remains a risk that someone, somewhere may combine your data with another source to re-identify individuals, or crack your pseudonymisation algorithm and expose the personal information that it contains. As always, the risks of re-identification will continue to increase with the development of new technologies, such as machine learning and pattern recognition, which enable unanticipated ways of combining and transforming data.

Remember that seemingly impersonal data points may be used for identification purposes when combined with the right data. When Netflix announced its notorious Netflix Prize for the best recommendation algorithm, the available data was scrubbed of any personal identifiers. But again, researchers were able to cross-reference personal movie preferences with data from IMDb.com and other online sources to identify individuals in the ‘anonymised’ Netflix dataset.

Despite the limitations of today’s de-identification methods, journalists should always be diligent in their efforts to protect the privacy of individuals, whether by concealing the identity of their source or by de-identifying the personal information behind their stories.

Leading by example, the ICIJ handles vast volumes of personal data with privacy front of mind. When reporting on the Panama Papers, journalists both protected the anonymity of the source of the leak, by using the pseudonym John Doe, and carefully evaluated how to publish the private information within the leaked documents. There is no reason why journalists of all backgrounds can’t take similar steps to strike a balance between privacy and the public interest in their reporting.

And there are many examples of the possible fallout from disclosing personal data when privacy-conscious steps aren’t taken, from personal tragedies following the Ashley Madison leak to the vast exposure of sensitive data associated with WikiLeaks. Data journalists should strive to avoid the same pitfalls and instead promote responsible data practices in their reporting at all times.

De-identification for data journalists - Getting important stories into the open, without revealing unnecessary personal details
